Co-Creative Data Sets

A series of co-creation workshops to teach machine learning literacy.

A series of co-creation workshops to teach machine learning literacy.

Creating ethical AI tools is a difficult task. Various harmful effects, such as mass extraction, labeling, bypassing consent, and the loss of context, have been recorded in recent research [2,3,10]. Other work investigates explicitly how racist prejudice and misogyny are encoded into training data sets that subsequently inform machine learning models [5,8,9,11]. Moreover, not only does misclassifying categories cause harm, but also the failure to include marginalized voices altogether [1,6].

In the current data economy, information about us is often appropriated in goal and outcome-oriented ways. Work across the HCI community argues for alternative avenues to engage with data in ways that are ambiguous [4], soft [12], or slow [7]. Data sets are often very uniform as they serve the purpose of creating efficient outcomes.

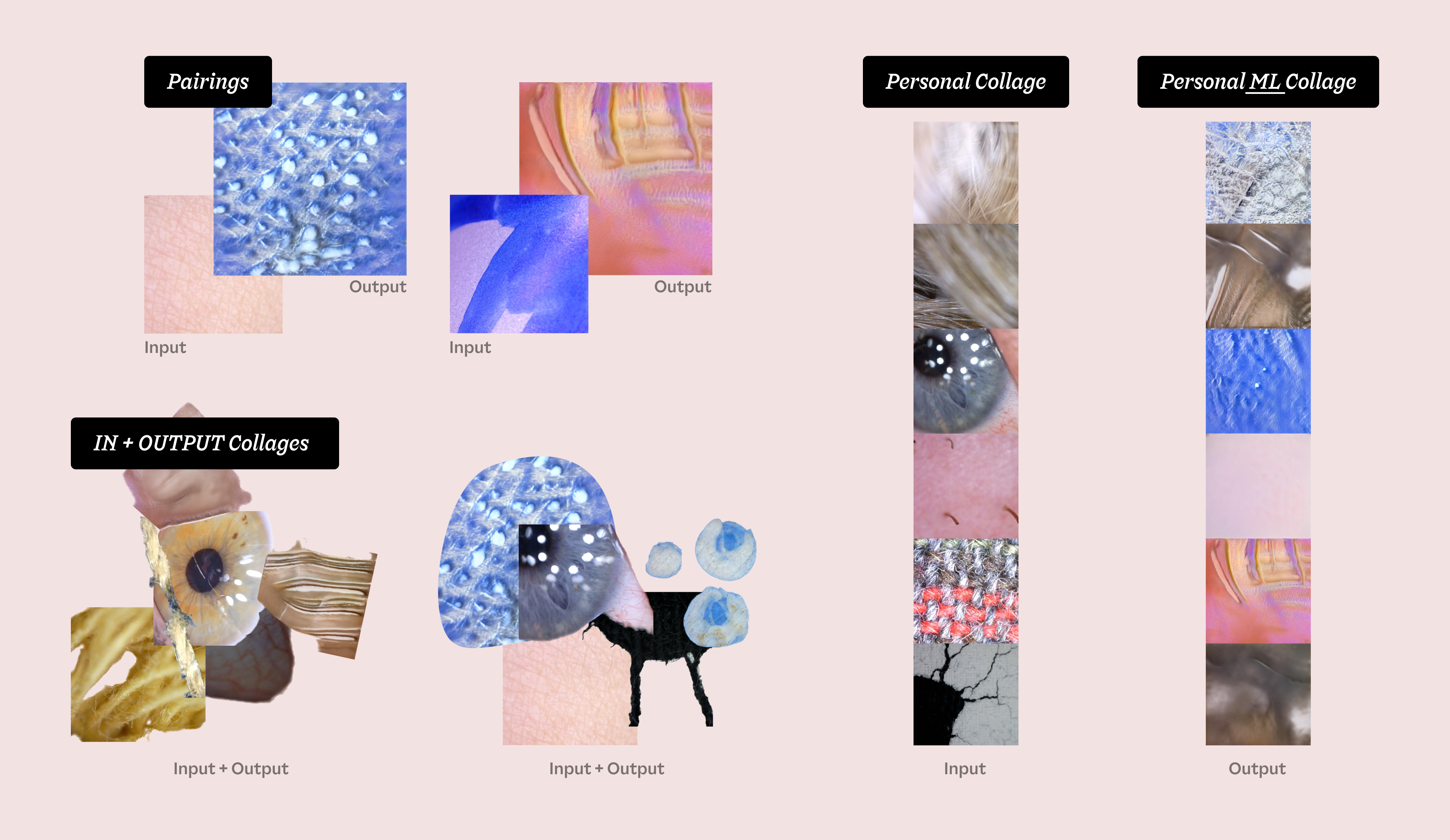

To explore these inquiries, I undertook a design research expedition utilizing techniques such as co-speculation and co-creation. The preliminary workshops consistently included a tangible device that facilitated participants in creating image data sets, both small and vast.

Once the StyleGAN2 models completed their training on the co-created data sets, individuals began experimenting with the results. The aim was to recognize specific similarities between the input and output outcomes. One participant utilized the styleGAN2 model to create a video sequence, and then matched it with a custom GIF made from images in the original dataset that displayed a visual symmetry.

As part of the 2023 SFU Summer School held in Burnaby, I organized a one-day workshop in collaboration with the Imaginative Methods Lab and Communications Department at SFU. The program focused on developing critical digital literacies among students in grades 10 to 12. The aim was to encourage them to explore data in a fun and critical manner. Specifically, during the data selves week, I hosted a data collection workshop.

In this first exercise, the goal was to create a data set based on personal objects that the students brought to class they felt represented by (e.g., their skateboard, a doll representing their heritage, or a ribbon for hokey triumphs).

In the second exercise, we discussed the challenges of comprehending the scale of extensive training data archives. Together, we investigated what a unique hand archive of this class will look like.

For the last exercise, we described data scraping and how large image training sets are created. As a metaphor, the students swopped into the role of a data scraper, extracting images all around the SFU Burnaby Campus.

Team: Nico Brand

Consulting:

Gillian Russel,

Samein Shamsher

University: Simon Fraser University

, Fall 2023

Special Thanks:

All the Kids & Participants, Samuel Barnett, Matt Desimone